from portkey_ai import Portkey
import os

# Load-balance requests across two providers: about 10% to OpenAI, 90% to Groq.
lb_config = {
    "strategy": {"mode": "loadbalance"},
    "targets": [
        {
            "provider": "openai",
            "api_key": os.environ["OPENAI_API_KEY"],
            "weight": 0.1,
        },
        {
            "provider": "groq",
            "api_key": os.environ["GROQ_API_KEY"],
            "weight": 0.9,
            # Replace the requested model with a Groq-hosted one for this target.
            "override_params": {"model": "llama3-70b-8192"},
        },
    ],
}

client = Portkey(config=lb_config)

response = client.chat.completions.create(
    messages=[{"role": "user", "content": "What's the meaning of life?"}],
    model="gpt-4o-mini",
)

print(response.choices[0].message.content)
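To make the weights concrete, here is a minimal sketch of weighted random selection in plain Python. It does not call Portkey and is not the gateway's actual implementation; `pick_provider` and the seeded generator are hypothetical names used only to illustrate how a 0.1/0.9 split spreads requests across two targets.

```python
import random
from collections import Counter

# Weights copied from lb_config above; everything else is illustrative.
WEIGHTS = {"openai": 0.1, "groq": 0.9}


def pick_provider(weights, rng):
    """Pick one provider name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(42)  # fixed seed so repeated runs give the same split
counts = Counter(pick_provider(WEIGHTS, rng) for _ in range(10_000))
print(counts)
```

Over many requests, roughly nine in ten land on the `groq` target, which is the behaviour the config's weights are meant to express.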

Implementation of conditional routing:

from portkey_ai import Portkey
import os

openai_api_key = os.environ["OPENAI_API_KEY"]
groq_api_key = os.environ["GROQ_API_KEY"]

pk_config = {
    "strategy": {
        "mode": "conditional",
        # Conditions are matched against the request metadata; "then" names a target.
        "conditions": [
            {
                "query": {"metadata.user_plan": {"$eq": "pro"}},
                "then": "openai",
            },
            {
                "query": {"metadata.user_plan": {"$eq": "basic"}},
                "then": "groq",
            },
        ],
        # Target used when no condition matches.
        "default": "groq",
    },
    "targets": [
        {
            "name": "openai",
            "provider": "openai",
            "api_key": openai_api_key,
        },
        {
            "name": "groq",
            "provider": "groq",
            "api_key": groq_api_key,
            "override_params": {"model": "llama3-70b-8192"},
        },
    ],
}

metadata = {"user_plan": "pro"}

client = Portkey(config=pk_config, metadata=metadata)

response = client.chat.completions.create(
    messages=[{"role": "user", "content": "What's the meaning of life?"}],
    # Model for the openai target (same as the earlier example); the groq target overrides it.
    model="gpt-4o-mini",
)

print(response.choices[0].message.content)

The example above uses the metadata value user_plan to determine which model handles a query. This is useful for SaaS providers that offer AI features through a freemium plan.
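The routing decision itself can be sketched in a few lines of plain Python. This only illustrates the idea and is not Portkey's implementation: `resolve_target` is a hypothetical helper, and it handles only the `$eq` operator used in the config above.

```python
def resolve_target(conditions, default, metadata):
    """Return the target name of the first matching condition, else the default."""
    prefix = "metadata."
    for condition in conditions:
        matched = True
        for key, rule in condition["query"].items():
            # Query keys look like "metadata.user_plan"; drop the prefix to get the field.
            field = key[len(prefix):] if key.startswith(prefix) else key
            if "$eq" not in rule or metadata.get(field) != rule["$eq"]:
                matched = False
                break
        if matched:
            return condition["then"]
    return default


# Same conditions as in pk_config above.
conditions = [
    {"query": {"metadata.user_plan": {"$eq": "pro"}}, "then": "openai"},
    {"query": {"metadata.user_plan": {"$eq": "basic"}}, "then": "groq"},
]

print(resolve_target(conditions, "groq", {"user_plan": "pro"}))    # openai
print(resolve_target(conditions, "groq", {"user_plan": "trial"}))  # groq (default)
```

Conditions are checked in order, so the first match wins, and any plan that matches nothing falls through to the default target.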

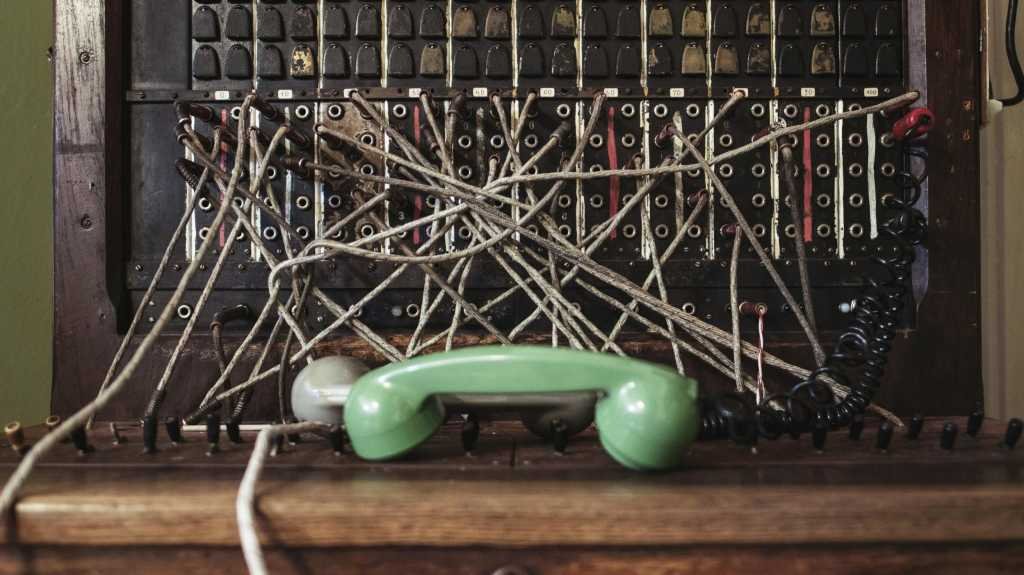

Using Portkey AI for LLM integration

Portkey represents a significant innovation in LLM integration. It addresses critical challenges in managing multiple providers and optimizing performance. By providing an open-source codebase that enables seamless interaction with various LLM providers, the project fills a gap in current AI development workflows.

The project thrives on community collaboration and welcomes contributions from developers around the world. With an active GitHub community and open issues, Portkey encourages developers to help expand its capabilities. Its transparent development process and open-source licensing make it accessible to both individual developers and enterprise teams.